When building scalable and reliable applications, especially in microservices and cloud-native architectures, two terms often come up: API Gateway and Load Balancer.

At first glance, they may seem similar since both sit between the client and backend services. However, they solve different problems and often work together in modern systems.

In this tutorial, we’ll break down the differences, use cases, advantages, and limitations of each.

🔹 What is an API Gateway?

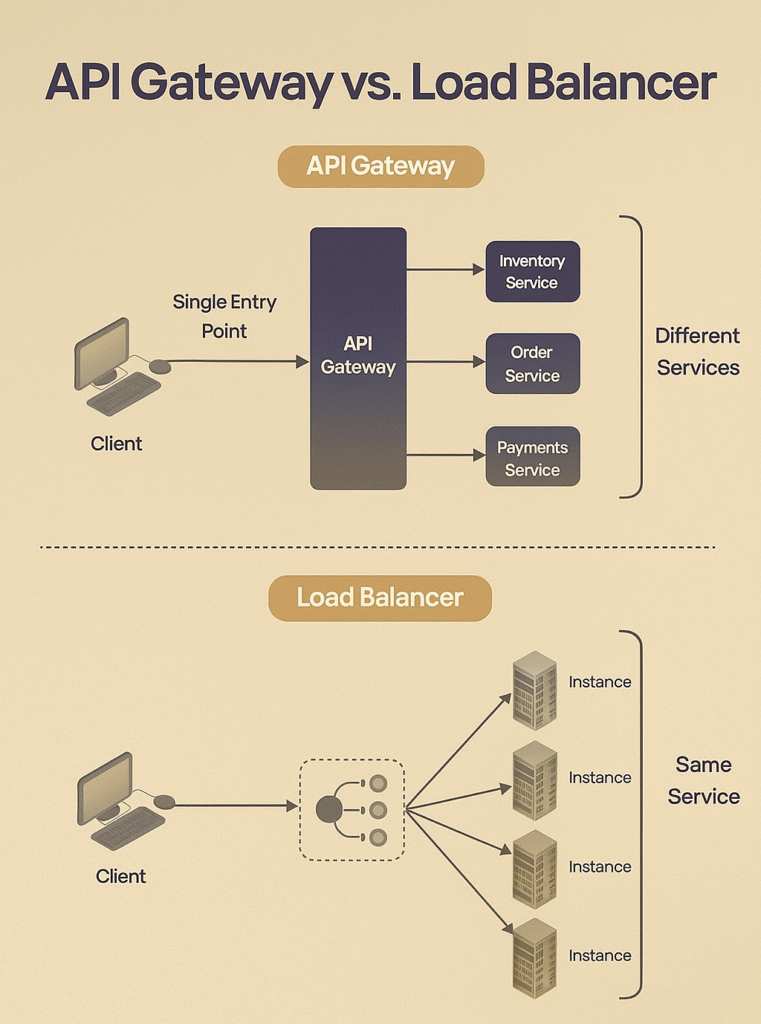

An API Gateway is the single entry point for all client requests in a microservices architecture.

Instead of allowing clients to directly call each microservice, the gateway acts as a centralized layer that manages, secures, and routes traffic.

It acts like a “smart postman”—receiving requests, verifying them, and routing them to the appropriate microservice.

🔹 Why Do We Need an API Gateway?

- Simplifies Client Interaction

- Clients don’t need to know about individual microservices.

- Instead of calling `/users-service`, `/orders-service`, and `/payments-service`, clients just call `/api`, and the Gateway routes internally.

- Centralized Security

- Authentication, authorization, and encryption policies are applied at one place.

- Prevents exposing every service directly to the internet.

- Decoupling Client from Backend

- If microservice endpoints or versions change, only the Gateway updates.

- Clients continue using the same unified API.

- Cross-Cutting Concerns

- Logging, monitoring, caching, and throttling are all handled centrally.
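To make the decoupling idea concrete, here is a minimal sketch of a gateway route table in Python. The prefixes and internal addresses are hypothetical, chosen only for illustration; a real gateway would load them from configuration. If a service moves, only the table changes and clients keep calling the same public path.

```python
from typing import Optional

# Hypothetical route table: public path prefix -> internal service address.
ROUTES = {
    "/api/users": "http://users-service:8080",
    "/api/orders": "http://orders-service:8080",
    "/api/payments": "http://payments-service:8080",
}


def resolve_upstream(path: str, routes: dict = ROUTES) -> Optional[str]:
    """Return the internal address for a public path, or None if nothing matches."""
    # Try longer prefixes first so more specific routes win.
    for prefix in sorted(routes, key=len, reverse=True):
        if path == prefix or path.startswith(prefix + "/"):
            return routes[prefix]
    return None


print(resolve_upstream("/api/orders/42"))  # http://orders-service:8080
print(resolve_upstream("/api/unknown"))    # None
```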

🔹 Key Features of an API Gateway

- Routing

- Decides which microservice should handle a request.

- Example: `/api/orders` → Order Service, `/api/payments` → Payment Service.

- Authentication & Authorization

- Validates user credentials (API keys, JWT, OAuth tokens).

- Ensures only authorized clients access protected services.

- Rate Limiting / Quota Management

- Limits requests per client or per time window to curb abuse and reduce the impact of DDoS attacks.

- Example: Free tier users → 1000 requests/day; Premium users → Unlimited.

- Load Balancing

- Distributes traffic among multiple instances of a microservice.

- Example: If the Payment Service runs on 3 servers, the Gateway balances requests.

- Response Caching

- Stores frequent responses (e.g., top products list) to reduce backend load.

- Protocol Translation

- Converts requests and responses between protocols: REST ↔ gRPC, REST ↔ SOAP, HTTP ↔ WebSocket.

- Logging & Monitoring

- Tracks request/response metrics, latency, error rates, etc., for observability.
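The free-tier and premium-tier quota from the rate limiting example can be sketched as a small per-client counter. This is an illustration under assumptions, not how any particular gateway implements it: the class name `DailyQuota`, the in-memory dictionary, and passing the day as a string are all simplifications made for this sketch.

```python
from collections import defaultdict

# Tier limits taken from the example above; None means unlimited.
DAILY_LIMITS = {"free": 1000, "premium": None}


class DailyQuota:
    """Counts requests per client per day and rejects those over the tier limit."""

    def __init__(self, limits=DAILY_LIMITS):
        self.limits = limits
        self.counts = defaultdict(int)  # (client_id, day) -> requests seen

    def allow(self, client_id: str, tier: str, day: str) -> bool:
        limit = self.limits[tier]
        if limit is None:
            return True  # unlimited tier: nothing to count
        key = (client_id, day)
        if self.counts[key] >= limit:
            return False  # quota exhausted; gateways commonly answer HTTP 429 here
        self.counts[key] += 1
        return True


# Small limit so the behaviour is easy to see.
quota = DailyQuota({"free": 3, "premium": None})
print([quota.allow("alice", "free", "2024-01-01") for _ in range(4)])
# [True, True, True, False]
```

Production gateways usually keep these counters in a shared store so that every gateway instance sees the same totals.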

🔹 Pros & Cons of API Gateway

✅ Pros

- Simplified Client API – Clients talk to one endpoint.

- Centralized Security – Uniform authentication and access control.

- Supports Microservices – Decouples clients from backend complexity.

- Cross-Cutting Services – Logging, rate limiting, caching, monitoring.

❌ Cons

- Maintenance Overhead – Needs constant tuning and scaling.

- Single Point of Failure – If the gateway fails, all requests fail, unless it is deployed redundantly.

- Increased Complexity – More moving parts to maintain.

- Performance Bottleneck – Adds an extra network hop.

🖼 Example:

A client calls /orders, the API Gateway authenticates the request, checks rate limits, and then routes it to the Order Service.
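The flow in this example (authenticate, check the rate limit, then route) can be sketched as a tiny request pipeline. Everything here is hypothetical: the `Gateway` and `Request` classes, the API-key check standing in for real authentication, and the simple per-key counter with no time window.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    api_key: str


@dataclass
class Gateway:
    valid_keys: set    # hypothetical set of accepted API keys
    routes: dict       # path -> service name
    max_requests: int  # allowed requests per key (no time window, for brevity)
    seen: dict = field(default_factory=dict)

    def handle(self, req: Request) -> tuple:
        # 1. Authenticate the caller.
        if req.api_key not in self.valid_keys:
            return (401, "unauthorized")
        # 2. Enforce the rate limit.
        used = self.seen.get(req.api_key, 0)
        if used >= self.max_requests:
            return (429, "too many requests")
        self.seen[req.api_key] = used + 1
        # 3. Route to the matching service.
        service = self.routes.get(req.path)
        if service is None:
            return (404, "no route")
        return (200, f"forwarded to {service}")


gw = Gateway(valid_keys={"k1"}, routes={"/orders": "Order Service"}, max_requests=2)
print(gw.handle(Request("/orders", "k1")))   # (200, 'forwarded to Order Service')
print(gw.handle(Request("/orders", "bad")))  # (401, 'unauthorized')
```

The order matters: rejecting unauthenticated or over-quota requests first means the backend service never sees them.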

🔹 What is a Load Balancer?

A Load Balancer is a networking component that sits between the client and the server pool. Its main role is to distribute incoming traffic across multiple servers that provide the same service.

Without a Load Balancer, a single server may become overwhelmed when too many requests arrive simultaneously. With a Load Balancer, traffic is intelligently divided, ensuring:

- Performance → Users experience faster and more consistent response times.

- Scalability → more servers can be added to handle more traffic.

- Availability → if one server fails, traffic is redirected to healthy servers.

📌 Key Analogy:

Think of a ticket counter at a movie theatre. Instead of everyone lining up at one counter, multiple counters are available. A supervisor (the Load Balancer) directs each new customer to the counter with the shortest line.

🔹 Why Do We Need a Load Balancer?

- Prevent Overloading → No single server is overwhelmed.

- Improve User Experience → Faster response times due to balanced load.

- Enable Horizontal Scaling → Easily add/remove servers without downtime.

- Fault Tolerance → If one server crashes, requests go to healthy servers.

- Disaster Recovery → Can route traffic to a backup data center if the primary fails.

🔹 How Load Balancers Work:

- Client Request → A user sends a request (e.g., open a website).

- Load Balancer Receives It → Instead of hitting a single server, the request first arrives at the Load Balancer.

- Health Check → The Load Balancer checks which servers are healthy and available.

- Routing Decision → It applies an algorithm (e.g., Round Robin, Least Connections) to choose a server.

- Forwarding Request → The request is forwarded to the chosen server.

- Response Back → The server processes the request and sends the response back to the client (sometimes directly, sometimes via the Load Balancer).
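The steps above can be sketched in a few lines of Python. This is a simplified model, not a real proxy: the `LoadBalancer` class is hypothetical, health status is set by hand through `mark_down` and `mark_up` instead of by probing servers, and the routing decision is plain Round Robin.

```python
class LoadBalancer:
    """Round Robin over the servers currently marked healthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)  # a real balancer updates this via health checks
        self._next = 0

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Walk the ring at most once, skipping servers that failed their health check.
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = LoadBalancer(["s1", "s2", "s3"])
lb.mark_down("s2")  # pretend the health check for s2 failed
print([lb.pick() for _ in range(4)])  # ['s1', 's3', 's1', 's3']
```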

🔄 Load Balancing Algorithms:

- Round Robin: Requests are handed to each server in turn, cycling back to the first after the last.

- Least Connections: Sends traffic to the server with the fewest active connections.

- IP Hash: Hashes the client IP to pick a server, so the same client consistently reaches the same server.

- Least Response Time: Sends traffic to the fastest responding server.
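Each of these algorithms boils down to a small selection function. The sketch below assumes the balancer already tracks the inputs (open connections and average latency per server); the function names and sample numbers are made up for illustration.

```python
import hashlib
from itertools import cycle


def round_robin(servers):
    """Return an iterator that yields servers in order, wrapping around forever."""
    return cycle(servers)


def least_connections(active):
    """active maps server -> number of currently open connections."""
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server; the same IP always lands on the same server."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def least_response_time(avg_latency_ms):
    """avg_latency_ms maps server -> recent average response time in milliseconds."""
    return min(avg_latency_ms, key=avg_latency_ms.get)


rr = round_robin(["a", "b"])
print([next(rr) for _ in range(3)])                      # ['a', 'b', 'a']
print(least_connections({"a": 5, "b": 2, "c": 9}))       # b
print(least_response_time({"a": 120.0, "b": 35.5}))      # b
print(ip_hash("203.0.113.7", ["a", "b", "c"]) == ip_hash("203.0.113.7", ["a", "b", "c"]))  # True
```

Note that with simple modulo hashing, adding or removing a server remaps many clients; that trade-off is one reason IP Hash is mostly used when session stickiness matters.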

🔹 Advantages of Load Balancers

- ✅ Scalability – Easily add/remove servers without downtime.

- ✅ High Availability – Routes traffic only to healthy servers.

- ✅ Fault Tolerance – Handles failures automatically.

- ✅ Efficient Resource Use – Ensures no server is idle while others are overloaded.

🔹 Limitations of Load Balancers

- ❌ Single Point of Failure (if not configured in redundancy mode).

- ❌ Extra Latency – Requests pass through an additional network hop.

- ❌ Complexity – Requires careful configuration and monitoring.

- ❌ Cost – Managed Load Balancers (AWS/GCP/Azure) add billing overhead.

🔹 Types of Load Balancers

Load Balancers can operate at different layers of the OSI model:

- L4 Load Balancer (Transport Layer)

- Routes based on IP address & Port.

- Does not inspect the actual content of the request.

- Example: AWS Network Load Balancer (NLB), HAProxy in L4 mode.

- Best for: TCP/UDP traffic (gaming servers, video streaming).

- L7 Load Balancer (Application Layer)

- Routes based on application-level data (HTTP headers, URLs, cookies).

- Can make smart decisions like sending `/images` requests to one server and `/api` requests to another.

- Example: AWS Application Load Balancer (ALB), Nginx, Envoy.

- Best for: Web applications & microservices.

- DNS-Based Load Balancer

- Distributes requests by resolving DNS to different server IPs.

- Example: AWS Route 53, Cloudflare Load Balancer.

- Best for: Global traffic routing across multiple regions.
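The practical difference between L4 and L7 is what the balancer can see when it decides. A rough sketch, with hypothetical types and pool names: the L4 function only has connection metadata to work with, while the L7 function can read the request path.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Connection:
    """What an L4 balancer sees: addresses and ports, no payload."""
    src_ip: str
    src_port: int
    dst_port: int


@dataclass
class HttpRequest:
    """What an L7 balancer can additionally see: the parsed HTTP request."""
    path: str
    headers: dict


def pick_l4(conn, servers):
    # Hash the connection metadata; the request content is never inspected.
    key = f"{conn.src_ip}:{conn.src_port}:{conn.dst_port}".encode()
    return servers[zlib.crc32(key) % len(servers)]


def pick_l7(req, pools, default):
    # Route on application data, here the URL path prefix.
    for prefix, pool in pools.items():
        if req.path.startswith(prefix):
            return pool
    return default


pools = {"/images": "static-pool", "/api": "api-pool"}
print(pick_l7(HttpRequest("/images/cat.png", {}), pools, "web-pool"))  # static-pool
print(pick_l7(HttpRequest("/about", {}), pools, "web-pool"))           # web-pool
```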

🔹 API Gateway vs Load Balancer – Key Differences

| Feature | API Gateway 📨 | Load Balancer ⚖️ |
|---|---|---|
| Purpose | Routes requests to different services | Distributes requests across instances of the same service |
| Scope | Microservices management | Traffic distribution |
| Functions | Auth, rate limiting, caching, logging | Routing, health checks, fault tolerance |
| Layer | Application (L7) | Transport (L4) or Application (L7) |
| Example | Kong, Apigee, AWS API Gateway | Nginx, HAProxy, AWS ALB/NLB |

🔹 When to Use Which?

Choosing between an API Gateway and a Load Balancer depends on the architecture, goals, and type of application you’re building. Let’s explore both in detail:

✅ Use API Gateway If:

- You are building a Microservices Architecture

- In a microservices setup, exposing each microservice (Order, Payment, Inventory, etc.) directly to clients is inefficient and insecure.

- An API Gateway provides a single unified entry point so clients don’t need to know the internal microservice structure.

- Example: In an e-commerce platform, the client just calls `/checkout`, while the API Gateway internally routes requests to the Order Service, Payment Service, and Inventory Service.

- You need Authentication & Security

- Individual microservices shouldn't each have to implement their own token validation and authentication logic.

- The API Gateway centralizes JWT validation, OAuth, API keys, and enforces security policies before forwarding requests.

- Example: In a banking app, the API Gateway validates customer tokens and ensures only authorized requests reach the Accounts Service or Transactions Service.

- You need Rate Limiting or Quota Management

- To prevent abuse (like DDoS or excessive API calls), the API Gateway enforces rate limits per client or per API key.

- Example: In a SaaS product, the free tier is limited to 1000 requests/day, while the premium tier gets unlimited access—this is managed at the API Gateway level.

- You want Protocol Translation

- Sometimes clients speak REST, but services run on gRPC, SOAP, or WebSockets.

- The API Gateway converts requests/responses between protocols.

- Example: A mobile app communicates over REST, while backend services talk over gRPC—the gateway handles translation.

- You want Response Caching & Performance Optimization

- Frequently accessed results (e.g., top products list) can be cached at the gateway to reduce latency.

- Example: An online news platform caches the latest headlines at the API Gateway, avoiding repeated calls to backend services.
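Response caching at the gateway is often a time-to-live (TTL) cache in front of the backend call. A minimal sketch, assuming an in-memory dictionary and a hypothetical `TTLCache` class; the injectable `clock` parameter is there only to make the behaviour easy to demonstrate.

```python
import time


class TTLCache:
    """Caches backend responses for a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.store = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key, fetch):
        now = self.clock()
        entry = self.store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: backend is not called
        value = fetch()      # cache miss or expired entry: call the backend
        self.store[key] = (now + self.ttl, value)
        return value


backend_calls = []


def fetch_headlines():
    backend_calls.append(1)  # stands in for a real backend request
    return ["headline A", "headline B"]


cache = TTLCache(ttl_seconds=60)
cache.get_or_fetch("/headlines", fetch_headlines)
cache.get_or_fetch("/headlines", fetch_headlines)
print(len(backend_calls))  # 1
```

The TTL is the trade-off knob: a longer value saves more backend calls but serves staler data.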

✅ Use Load Balancer If:

- You want to Scale Your Application Horizontally

- When a single server can’t handle all requests, multiple identical instances are deployed.

- The Load Balancer spreads traffic across them.

- Example: A social media app runs 50 web servers behind a Load Balancer—so millions of users can be served seamlessly.

- You need High Availability & Fault Tolerance

- Load Balancers perform health checks and stop sending traffic to failed servers.

- If one instance crashes, the Load Balancer routes traffic only to healthy ones.

- Example: In a video streaming platform, if one server goes down, the Load Balancer redirects users to working servers as soon as health checks detect the failure, keeping downtime to a minimum.

- You want Efficient Resource Utilization

- Load Balancers use algorithms (Round Robin, Least Connections, IP Hash) to evenly distribute requests.

- This ensures no server is overloaded while others remain idle.

- Example: An online exam portal during peak hours balances load across multiple servers so no single node slows down.

- You want Global Traffic Management

- Some load balancers work at DNS level (e.g., AWS Route 53, Cloudflare Load Balancer) to direct users to the nearest or healthiest region.

- Example: A global e-commerce site sends US users to US servers, EU users to EU servers—minimizing latency.

- You want to Enhance Network Performance

- Load Balancers (especially L7) can do SSL termination, request compression, and even Web Application Firewall (WAF) integration.

- Example: In a fintech app, SSL termination at the Load Balancer reduces encryption load on backend servers.

🔹 Putting It Together

- API Gateway = Smart traffic controller for different services

👉 Ideal for microservices, authentication, caching, protocol translation.

- Load Balancer = Simple traffic distributor for same service instances

👉 Ideal for scaling, availability, resource efficiency.

📌 Best Practice: In most enterprise systems, you’ll use both together.

- The Load Balancer ensures requests are spread evenly across multiple server instances.

- The API Gateway ensures requests are secure, authenticated, and routed to the correct microservice.
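How the two layers combine can be sketched as two lookups in sequence: the gateway decides which service handles a path, then a per-service balancer decides which instance gets the request. The service names, addresses, and the `build_dispatcher` helper are hypothetical, and Round Robin stands in for whatever algorithm the balancer actually uses.

```python
from itertools import cycle


def build_dispatcher(routes, instances):
    """routes: path -> service name; instances: service name -> list of addresses."""
    # One Round Robin iterator per service plays the role of its load balancer.
    balancers = {name: cycle(hosts) for name, hosts in instances.items()}

    def dispatch(path):
        service = routes.get(path)       # gateway step: which service?
        if service is None:
            return None
        return next(balancers[service])  # balancer step: which instance?

    return dispatch


dispatch = build_dispatcher(
    routes={"/api/orders": "orders", "/api/payments": "payments"},
    instances={
        "orders": ["orders-1:8080", "orders-2:8080"],
        "payments": ["payments-1:8080"],
    },
)
print([dispatch("/api/orders") for _ in range(3)])
# ['orders-1:8080', 'orders-2:8080', 'orders-1:8080']
```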

💡 Example: Netflix Architecture

- API Gateway (Zuul, later Zuul 2) → Handles routing, security, throttling.

- Load Balancer (Ribbon for client-side balancing, plus AWS ELB) → Ensures millions of users are distributed across multiple backend servers efficiently.

📝 Conclusion

- An API Gateway is about managing APIs and microservices.

- A Load Balancer is about distributing traffic and ensuring availability.

Together, they form the backbone of modern cloud-native, microservices-driven architectures.

✅ Final Takeaway

- Use an API Gateway when you have multiple microservices and need a single entry point with security and routing.

- Use a Load Balancer when you have multiple instances of the same service and need scaling and high availability.

- In real-world enterprise systems → They complement each other for a robust, secure, and scalable architecture.